Video game development has unique constraints that cause its build systems to lag behind “enterprise” development.

A primary factor is size. Game engines are massive. It’s common for a game repository to exceed 2 TB, and a single game artifact might exceed 100 GB. At these sizes, even the simplest actions in your CI/CD pipeline can take tens of minutes, if not hours.
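To put rough numbers on that, here is a back-of-the-envelope sketch in Python. The link speeds (1 Gbit/s and 10 Gbit/s) are assumptions chosen for illustration, not figures from any particular studio, and the calculation assumes the link is fully saturated with no protocol, disk, or version-control overhead, so real pulls will be slower.

```python
TB = 10**12  # decimal terabyte, in bytes
GB = 10**9   # decimal gigabyte, in bytes


def transfer_minutes(size_bytes, link_bits_per_second):
    """Best-case minutes to move size_bytes over a fully saturated link."""
    return size_bytes * 8 / link_bits_per_second / 60


# Assumed link speeds, for illustration only.
for label, bps in [("1 Gbit/s", 1e9), ("10 Gbit/s", 10e9)]:
    repo = transfer_minutes(2 * TB, bps)
    artifact = transfer_minutes(100 * GB, bps)
    print(f"{label}: 2 TB repo ~{repo:.0f} min, 100 GB artifact ~{artifact:.0f} min")
```

Even in this idealized case, a clean 2 TB pull takes about 4.4 hours on a 1 Gbit/s link and about 27 minutes on a 10 Gbit/s link, before any build work has started.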

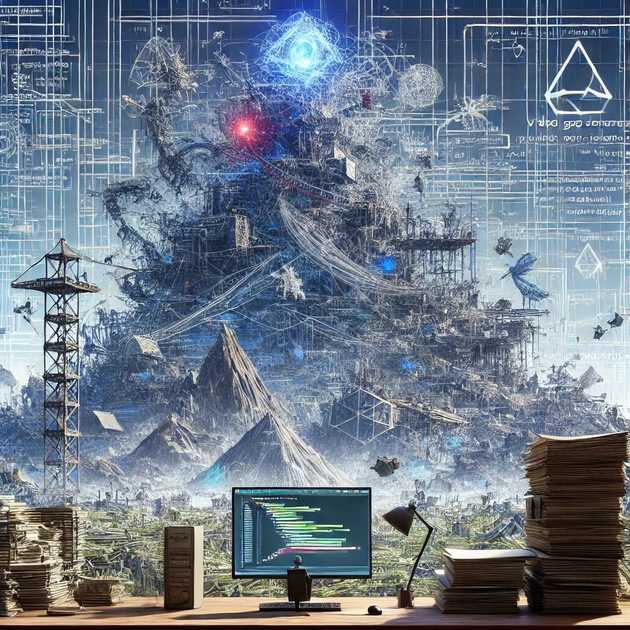

How do game companies overcome these challenges? Usually by aggressively caching everything. If a clean pull of your repo takes over an hour, you’re incentivized to maintain stateful build agents and do incremental builds. If a build agent needs over 2 TB per branch for its workspace, you’re incentivized to dedicate separate agents to separate branches. Build engineers might get creative in hopes of speeding things up, but they must always be wary of introducing subtle issues. For example, we can build the code and cook the assets in parallel, but only if neither step depends on the other’s output.
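The parallelism caveat can be made concrete with a small sketch. The step names (`build_code`, `cook_assets`, `package`) and the helper functions `plan_stages` and `run_pipeline` are hypothetical, invented for this illustration rather than taken from any engine or CI product. The idea is to declare each step's dependencies explicitly and only run steps side by side when none of them waits on another.

```python
from concurrent.futures import ThreadPoolExecutor


def plan_stages(deps):
    """Group steps into stages. Steps in the same stage do not depend on each other."""
    remaining = {name: set(needs) for name, needs in deps.items()}
    done, stages = set(), []
    while remaining:
        ready = sorted(n for n, needs in remaining.items() if needs <= done)
        if not ready:
            raise ValueError("cycle or unknown dependency in build steps")
        stages.append(ready)
        done.update(ready)
        for n in ready:
            del remaining[n]
    return stages


def run_pipeline(deps, actions):
    """Run each stage's steps concurrently; finish the stage before moving on."""
    with ThreadPoolExecutor() as pool:
        for stage in plan_stages(deps):
            # list() waits for every step in the stage and re-raises any failure
            list(pool.map(lambda name: actions[name](), stage))


# Hypothetical pipelines: one where cooking is independent of the code build,
# and one where cooking needs something the code build produces.
independent = {"build_code": [], "cook_assets": [], "package": ["build_code", "cook_assets"]}
coupled = {**independent, "cook_assets": ["build_code"]}

print(plan_stages(independent))
print(plan_stages(coupled))
```

With the `independent` graph, the build and the cook land in the same stage and run concurrently. With the `coupled` graph, the planner serializes them. The subtle issues mentioned above tend to appear when a dependency like that exists but is never declared.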

Ultimately, these constraints may dictate how your build system works. If your build agents are stateful, you probably can’t spin up agents on demand. If your agents are persistent, perhaps it makes more sense to host them on-prem instead of in an expensive cloud environment.

What kinds of things do you do to keep your builds performant?